Mastering DL - Ep1: Artificial Neural Networks (ANNs)🧐️

What are ANNs and how can they assist you in solving real-world problems? Stick around, and in the next few minutes, you'll know everything😉️

In my previous article, A sneak-peak to Deep Learning🧐️, we learned what Deep Learning and Neural Networks are. I recommend going through it before moving forward, because here I’ll focus on the ANN part only.

'Predicting the Future isn't magic, it's Artificial Intelligence' - Dave Waters

What are Artificial Neural Networks?🤔️

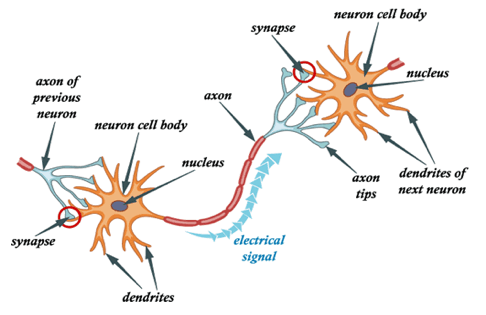

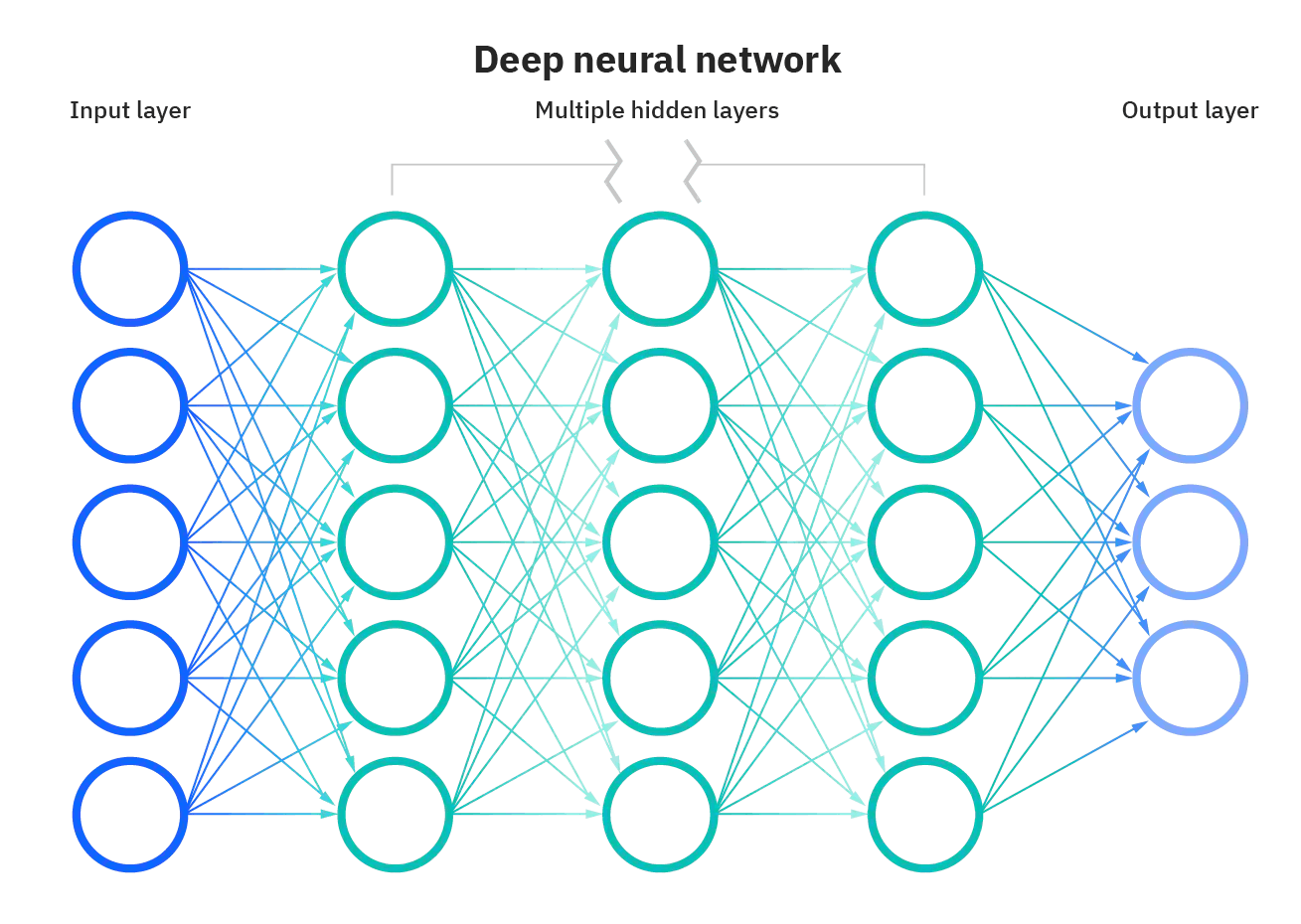

First of all, the concept of the artificial neural network was inspired by human biology and the way neurons in the human brain work together to interpret inputs from the senses. An artificial neuron is only loosely modeled on the neurons of our nervous system: it is a simplified mathematical abstraction, not an exact copy.

ANNs have the ability to learn from the data and provide responses in the form of predictions or classifications. They are nonlinear statistical models which can interpret a complex relationship between the inputs and outputs to discover a new pattern.

These artificial neural networks can perform a range of tasks, including Image identification, Speech recognition, Machine translation, and Medical diagnosis. Because of their strong prediction capabilities, ANNs can be used to improve existing data analysis approaches.

ANNs generalize: after learning from the initial inputs and their relationships, a trained network can infer relationships in data it has never seen, which lets the model make predictions on unseen data.🤩️

But How Does Artificial Neural Net Learn?😲️

The artificial neural network receives an input signal from an external source, such as a pattern or an image, in the form of a vector. Each of the n inputs is denoted mathematically as x(n). Each input is then multiplied by the weight assigned to it.

These weights represent the strength of the connections between neurons inside the network. Within the processing unit, all of the weighted inputs are summed. If the weighted total is 0, a bias is added to make the output non-zero; more generally, the bias shifts the system's response.

The bias can be treated as one more weight whose input is fixed at 1. The weighted total is then passed through an activation function (also called a transfer function), which produces the desired output.
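To make the weighted sum and bias concrete, here is a minimal sketch of a single neuron. The input values, weights, and bias are made up for illustration, and the sigmoid is just one possible choice of activation function.

```python
import numpy as np

# Hypothetical values, chosen only for illustration.
x = np.array([0.0, 1.0, 1.0])    # inputs x(1), x(2), x(3)
w = np.array([0.5, -0.2, 0.1])   # one weight per input
b = 0.3                          # bias: a weight on a constant input of 1

def neuron_output(x, w, b):
    """Weighted sum of the inputs plus the bias, passed through a sigmoid."""
    z = np.dot(x, w) + b         # weighted total
    return 1.0 / (1.0 + np.exp(-z))

y = neuron_output(x, w, b)
print(y)
```

Note that with an all-zero input the weighted total is 0, so the output depends on the bias alone.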

Activation functions can be linear or non-linear. The binary step, linear, sigmoid, and hyperbolic tangent (tanh) functions are some of the most commonly used.
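As a quick illustration, the activation functions named above could be written with NumPy roughly like this. The function names and the threshold of 0 for the binary step are my own choices, not a standard API.

```python
import numpy as np

def binary_step(z):
    # 1 when the weighted total is at or above 0, otherwise 0 (assumed threshold)
    return np.where(z >= 0, 1.0, 0.0)

def linear(z):
    # passes the weighted total through unchanged
    return z

def sigmoid(z):
    # squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

def tanh(z):
    # squashes any real number into the range (-1, 1)
    return np.tanh(z)

z = np.array([-2.0, 0.0, 2.0])
for fn in (binary_step, linear, sigmoid, tanh):
    print(fn.__name__, fn(z))
```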

Note: In a naive approach, where each weight is adjusted on its own, a single input sample has to be passed through the neural net several times (as many times as there are weights) to calculate and optimize all the weights for that one input. This is both computationally expensive and time-consuming.
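To see why this gets expensive, here is a rough sketch of one such naive scheme: estimating the gradient by nudging each weight separately (finite differences). This is my own illustration and assumes a single sigmoid neuron with a squared-error loss; the point is that every weight costs one extra forward pass.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(w, x, target):
    """Squared error of a single sigmoid neuron on one sample."""
    return 0.5 * (target - sigmoid(np.dot(x, w))) ** 2

def numerical_gradient(w, x, target, eps=1e-6):
    grad = np.zeros_like(w)
    base = loss(w, x, target)        # one reference forward pass
    for i in range(w.size):          # plus one forward pass per weight
        w_shift = w.copy()
        w_shift[i] += eps            # nudge only weight i
        grad[i] = (loss(w_shift, x, target) - base) / eps
    return grad

x = np.array([1.0, 0.0, 1.0])
w = np.array([0.5, 0.5, 0.5])
grad = numerical_gradient(w, x, target=1.0)
print(grad)
```

With three weights this is cheap, but a network with millions of weights would need millions of forward passes per sample.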

Back-Propagation is Here to Save Us😎️

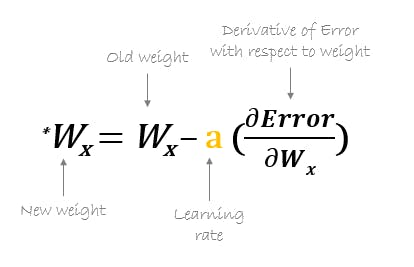

Backpropagation enables us to calculate the partial derivative of the error with respect to each weight in a single backward pass.

We can say that backpropagation is the process of finding how much each weight contributes to the error, so that the weights can be updated toward values that minimize the error/cost function, i.e. the difference between the actual and predicted values.

The weights are updated with the help of optimizers. Optimizers are the mathematical rules that update the parameters of a neural network, i.e. its weights, to minimize the error.

Calculate the cost of prediction <-> Fine-tune the weights <-> Minimize the cost
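The loop above can be sketched in a few lines. This is a minimal example under my own assumptions: a single sigmoid neuron, a mean squared-error cost, plain gradient descent as the optimizer, and a made-up toy dataset and learning rate.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Toy data (hypothetical): the label equals the first feature.
X = np.array([[0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [0.0, 0.0]])
y = np.array([[0.0], [1.0], [1.0], [0.0]])

w = np.zeros((2, 1))
learning_rate = 0.5   # assumed value
costs = []

for step in range(200):
    pred = sigmoid(X @ w)                      # forward pass
    cost = 0.5 * np.mean((y - pred) ** 2)      # calculate the cost of prediction
    costs.append(cost)
    # backward pass: the chain rule yields every partial derivative at once
    grad = X.T @ ((pred - y) * pred * (1 - pred)) / len(X)
    w -= learning_rate * grad                  # fine-tune the weights

print(costs[0], costs[-1])
```

Unlike the one-weight-at-a-time approach, each iteration here needs only one forward pass and one backward pass, no matter how many weights there are.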

Types of Artificial Neural Network🎗️

- Feed-Forward ANN

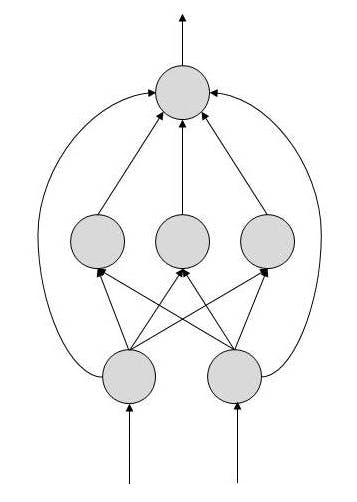

A feed-forward neural network allows information to flow only in the forward direction, from the input nodes, through the hidden layers, and to the output nodes. There are no cycles or loops in the network.

The network's response to an input emerges from the collective behavior of its connected neurons, and by comparing its outputs with the inputs that produced them we can assess how well the network is doing. The primary advantage of this network is that it learns to evaluate and recognize input patterns.
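Here is a small sketch of that one-way flow. The layer sizes (3 inputs, 4 hidden units, 1 output), the random weights, and the sigmoid activation are assumptions for illustration only.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)

# Hypothetical layer sizes: 3 inputs -> 4 hidden units -> 1 output.
W1, b1 = rng.normal(size=(3, 4)), np.zeros(4)
W2, b2 = rng.normal(size=(4, 1)), np.zeros(1)

def forward(x):
    hidden = sigmoid(x @ W1 + b1)        # input layer -> hidden layer
    output = sigmoid(hidden @ W2 + b2)   # hidden layer -> output layer
    return output                        # nothing is ever fed backwards

batch = np.array([[0.0, 1.0, 1.0], [1.0, 0.0, 0.0]])
out = forward(batch)
print(out)
```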

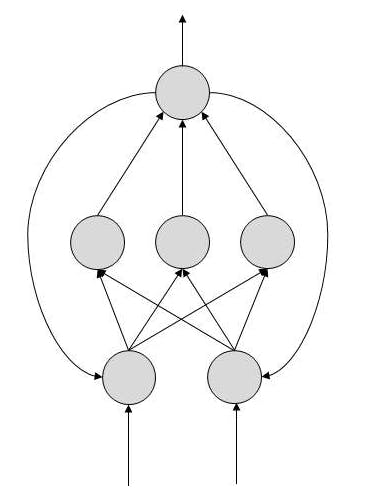

- Feedback ANN

In this type of ANN, the output is fed back into the network so that it can refine its results internally. Feedback networks feed information back into themselves and are well suited to solving optimization problems. Internal system error correction is one use of feedback ANNs.
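One simple way to picture feedback is a recurrent update, where the previous output is mixed back in with the next input. The sketch below is only one possible form of a feedback network; the sizes, random weights, and tanh activation are my own assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 2 input features, 3 state units.
W_in = rng.normal(scale=0.5, size=(2, 3))     # input -> state
W_back = rng.normal(scale=0.5, size=(3, 3))   # previous state -> state (the feedback loop)

def step(x, state):
    """Combine the current input with the network's own previous output."""
    return np.tanh(x @ W_in + state @ W_back)

sequence = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
state = np.zeros(3)
for x in sequence:
    state = step(x, state)   # the output returns into the network

print(state)
```

Because of the loop, the same input can produce a different output depending on what the network has seen before.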

Simple ANN Implementation with Python🤓️

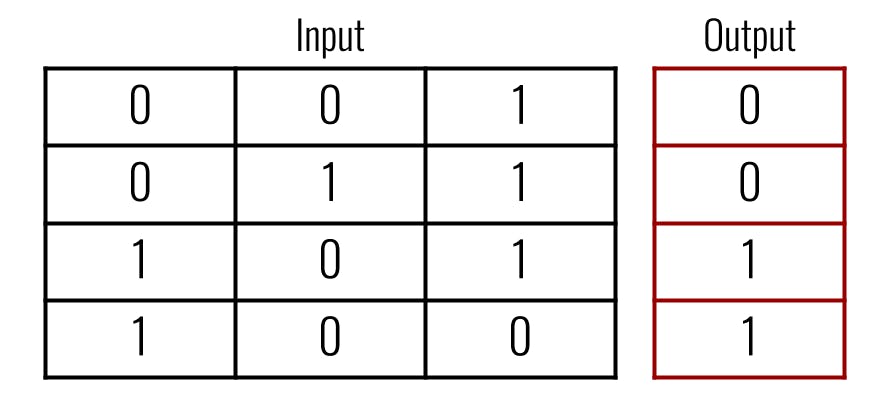

In particular, this neural net will be given an input matrix with six samples, each with three feature columns consisting of solely zeros and ones.

For example, one sample in the training set may be [0, 1, 1]. The output for each sample will be a single one or zero, determined by the number in the first feature column of that sample.

import numpy as np  # helps with the math
import matplotlib.pyplot as plt  # to plot error during training

# input data
inputs = np.array([[0, 1, 0],
                   [0, 1, 1],
                   [0, 0, 0],
                   [1, 0, 0],
                   [1, 1, 1],
                   [1, 0, 1]])

# output data
outputs = np.array([[0], [0], [0], [1], [1], [1]])

# create NeuralNetwork class
class NeuralNetwork:

    # initialize variables in class
    def __init__(self, inputs, outputs):
        self.inputs = inputs
        self.outputs = outputs
        # initialize weights as .50 for simplicity
        self.weights = np.array([[.50], [.50], [.50]])
        self.error_history = []
        self.epoch_list = []

    # activation function ==> S(x) = 1 / (1 + e^(-x))
    # with deriv=True, x must already be a sigmoid output, so S'(x) = x * (1 - x)
    def sigmoid(self, x, deriv=False):
        if deriv:
            return x * (1 - x)
        return 1 / (1 + np.exp(-x))

    # data will flow through the neural network.
    def feed_forward(self):
        self.hidden = self.sigmoid(np.dot(self.inputs, self.weights))

    # going backwards through the network to update weights
    def backpropagation(self):
        self.error = self.outputs - self.hidden
        delta = self.error * self.sigmoid(self.hidden, deriv=True)
        self.weights += np.dot(self.inputs.T, delta)

    # train the neural net for 25,000 iterations
    def train(self, epochs=25000):
        for epoch in range(epochs):
            # flow forward and produce an output
            self.feed_forward()
            # go back through the network to make corrections based on the output
            self.backpropagation()
            # keep track of the error history over each epoch
            self.error_history.append(np.average(np.abs(self.error)))
            self.epoch_list.append(epoch)

    # function to predict output on new and unseen input data
    def predict(self, new_input):
        prediction = self.sigmoid(np.dot(new_input, self.weights))
        return prediction

# create neural network
NN = NeuralNetwork(inputs, outputs)

# train neural network
NN.train()

# create two new examples to predict
example = np.array([[1, 1, 0]])
example_2 = np.array([[0, 1, 1]])

# print the predictions for both examples
print(NN.predict(example), ' - Correct: ', example[0][0])
print(NN.predict(example_2), ' - Correct: ', example_2[0][0])

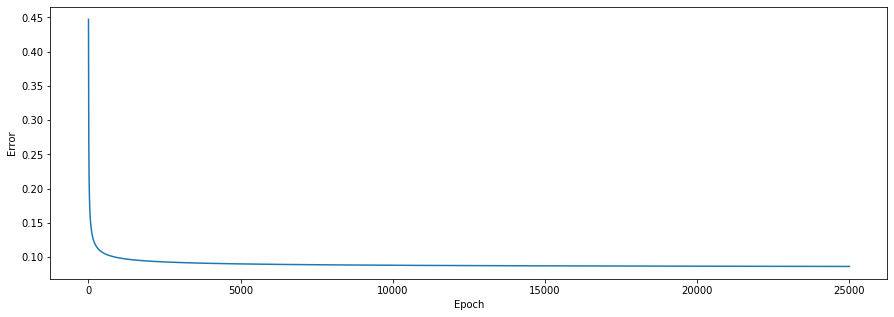

# plot the error over the entire training duration
plt.figure(figsize=(15,5))
plt.plot(NN.epoch_list, NN.error_history)
plt.xlabel('Epoch')
plt.ylabel('Error')
plt.show()

Output:

[[0.99089925]] - Correct: 1

[[0.006409]] - Correct: 0
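The network returns values between 0 and 1 rather than hard labels. If you need a class label, one common option is to round at a threshold. The 0.5 cutoff below is an assumption, not something the network itself defines, and the array simply reuses the two values printed above.

```python
import numpy as np

# The two raw predictions shown in the output above.
raw = np.array([[0.99089925], [0.006409]])

# Assumed cutoff: treat anything at or above 0.5 as class 1.
labels = (raw >= 0.5).astype(int)
print(labels.ravel())
```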

Applications of ANN🏎️

Finally, here are some of the real-life applications of ANNs.

- Handwritten Character Recognition 🖋️

- Speech Recognition 🎤️

- Signature Classification ☦️

- Facial Recognition 👧️🧒️

- Forecasting 🌦️

Any questions? Reach out to me✌️

Thanks for reading this article. I hope it sparked your interest in this fascinating field.😅️